This week saw some basic feasibility work, and the establishment of some “infrastructure” to support the project going forward.

As I have stated before, I prefer to use a “make it up as I go along” project planning methodology. The less structure, the better. I consider overdeveloped structure to be “concrete galoshes.”

With that in mind, the first thing that I did was to create my “napkin sketch” of the project.

I decided to approach the project in four “phases.” I have no previous experience with ffmpeg, but I have done a bit of video work before, and have experience (minimal) with VLCKit. I expect to encounter lots of problems, and have to crack open the books and don my thinking cap.

In “Phase 0,” I simply wanted to reproduce Martin Riedl’s results, using my own RTSP streams, and using the command-line, “regular” ffmpeg on my Mac.

This has already been done, at the time of the writing of this entry.
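For readers who have not seen this kind of conversion, here is a minimal sketch of what a Phase 0 style command might look like, written as Python that assembles the ffmpeg argument list. The camera URL, output path, and segment settings are placeholders, and the helper name `build_hls_command` is made up for illustration; these are not the values actually used in the project. It also assumes the camera already emits H.264, so the video can be copied rather than re-encoded.

```python
import shlex


def build_hls_command(rtsp_url, playlist_path, segment_seconds=2, playlist_length=5):
    """Return an ffmpeg argument list that repackages an RTSP feed as HLS."""
    return [
        "ffmpeg",
        "-rtsp_transport", "tcp",   # ask for RTSP over TCP instead of UDP
        "-i", rtsp_url,             # the camera's RTSP URL (placeholder)
        "-c:v", "copy",             # pass the video through without re-encoding
        "-an",                      # drop audio to keep the sketch small
        "-f", "hls",                # use ffmpeg's HLS muxer
        "-hls_time", str(segment_seconds),       # target segment length
        "-hls_list_size", str(playlist_length),  # segments kept in the playlist
        "-hls_flags", "delete_segments",         # remove segments that fall off
        playlist_path,
    ]


if __name__ == "__main__":
    # Hypothetical camera address and output location.
    cmd = build_hls_command("rtsp://192.0.2.10:554/stream1", "/tmp/hls/stream.m3u8")
    print(shlex.join(cmd))
```

Running the script only prints the command; passing the list to `subprocess.run` would actually start the conversion.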

It’s quite possible to do most of this work without writing a line of code. I could do it by setting up scripts and using command-line utilities, but that would require that every time the system is set up, a great deal of really finicky work would need to be done at a fairly deep level. I’ve worked with setups like that before, and it’s not a good thing to do to customers.

I wanted to write a simple, single application that could be easily installed and simply started up, without having to deal with much in the way of setup. I want as much “one-click” as possible.

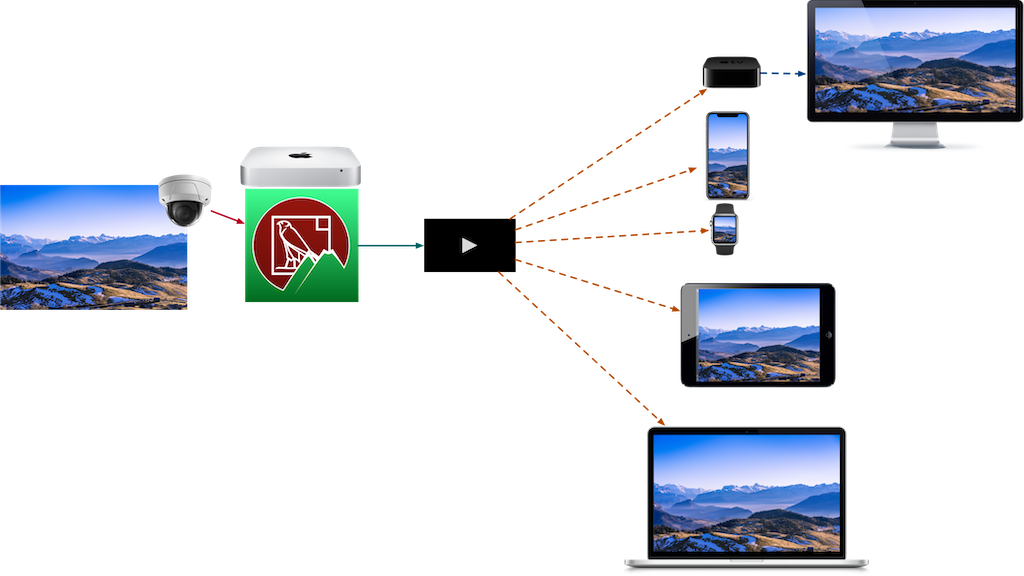

PHASE 1

In Phase 1, I wanted to get the same basic thing going, but in an application on the Mac:

My idea is to run the application as a server, on a MacOS device (like maybe a Mini). This would have a Webserver (I will look at having the application provide the Webserver, as opposed to using the built-in one, or running something like MAMP).

At the time of the writing of this entry, Phase 1 is about 75% done. It’s still quite rough, but the translation is happening, and HLS is being provided.

Apple devices, which can natively play HLS format, could then connect to the server, and see the HLS-translated video stream.
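To illustrate the serving side, here is a rough sketch of a static file server that hands out an HLS playlist and its segments with the content types that players generally expect. Python's standard-library server is used purely as a stand-in; it is not the Webserver used by the application. The class name `HLSRequestHandler`, the `/tmp/hls` directory, and port 8080 are all assumptions.

```python
import functools
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


class HLSRequestHandler(SimpleHTTPRequestHandler):
    """Static file handler that labels HLS playlists and segments."""

    extensions_map = {
        **SimpleHTTPRequestHandler.extensions_map,
        ".m3u8": "application/vnd.apple.mpegurl",  # HLS playlist
        ".ts": "video/mp2t",                       # MPEG-TS media segment
    }

    def end_headers(self):
        # A live playlist is rewritten every few seconds, so discourage caching.
        self.send_header("Cache-Control", "no-cache")
        super().end_headers()


def make_server(directory, port=8080):
    """Build a threaded HTTP server rooted at the HLS output directory."""
    handler = functools.partial(HLSRequestHandler, directory=directory)
    return ThreadingHTTPServer(("", port), handler)


if __name__ == "__main__":
    # Hypothetical directory where ffmpeg writes stream.m3u8 and its segments.
    make_server("/tmp/hls").serve_forever()
```

With something like this running, Safari on an Apple device could be pointed at the playlist URL on the server, assuming the playlist is named `stream.m3u8`.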

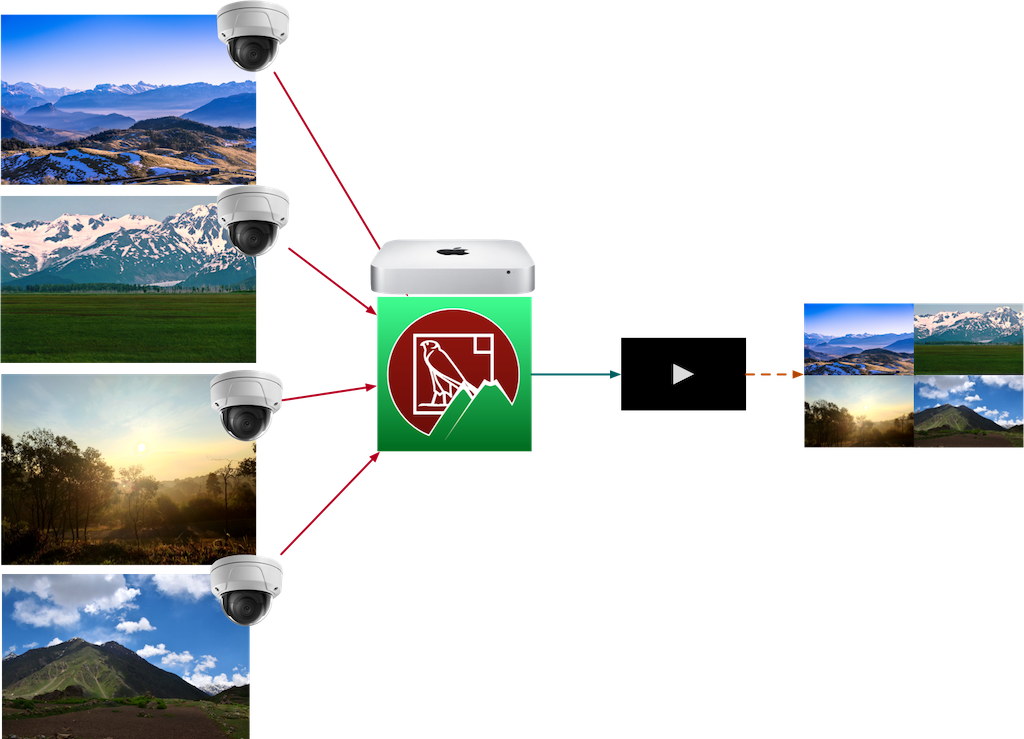

PHASE 2

In Phase 2, I would increase the number of video streams coming in (and the variety, as well). I would aggregate them into a single “thumbnail” HLS stream:

At this point, I expect to start seeing some resource contention issues, and it will be important for me to learn how best to leverage ffmpeg’s configuration options.
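One way the aggregation could be expressed is with ffmpeg's `scale` and `xstack` filters, which shrink each input and tile the results into a single picture. The sketch below only builds the filter graph string; the tile size, the two-column default, and the `build_grid_filter` name are assumptions, and the approach actually taken in the project may differ. Note that any filtering forces ffmpeg to decode and re-encode the video, which is one likely source of the resource contention mentioned above.

```python
def build_grid_filter(count, columns=2, tile_width=320, tile_height=180):
    """Return a filter graph that scales each input and tiles them in a grid."""
    if count < 2:
        raise ValueError("xstack needs at least two inputs")

    # Scale every input to the same tile size and give it a label: [v0], [v1], ...
    scaled = [
        f"[{i}:v]scale={tile_width}:{tile_height}[v{i}]" for i in range(count)
    ]

    def offset(steps, unit):
        # Positions are sums of the first tile's width or height, e.g. "w0+w0".
        return "+".join([unit] * steps) if steps else "0"

    # One "x_y" position per input, filled left to right, then top to bottom.
    layout = "|".join(
        f"{offset(i % columns, 'w0')}_{offset(i // columns, 'h0')}"
        for i in range(count)
    )
    labels = "".join(f"[v{i}]" for i in range(count))
    stack = f"{labels}xstack=inputs={count}:layout={layout}[grid]"
    return ";".join(scaled + [stack])


if __name__ == "__main__":
    print(build_grid_filter(4))
```

The resulting string would be passed to ffmpeg with `-filter_complex`, and the tiled output selected with `-map "[grid]"`.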

It should be noted that I will be using the ONVIF versions of RTSP streams from the devices. This means that they may not be so “well-behaved.” Device manufacturers are basically being dragged, kicking and screaming, into the ONVIF world. I expect things to improve substantially over the next couple of years, but right now, it’s a bit “wild west.”

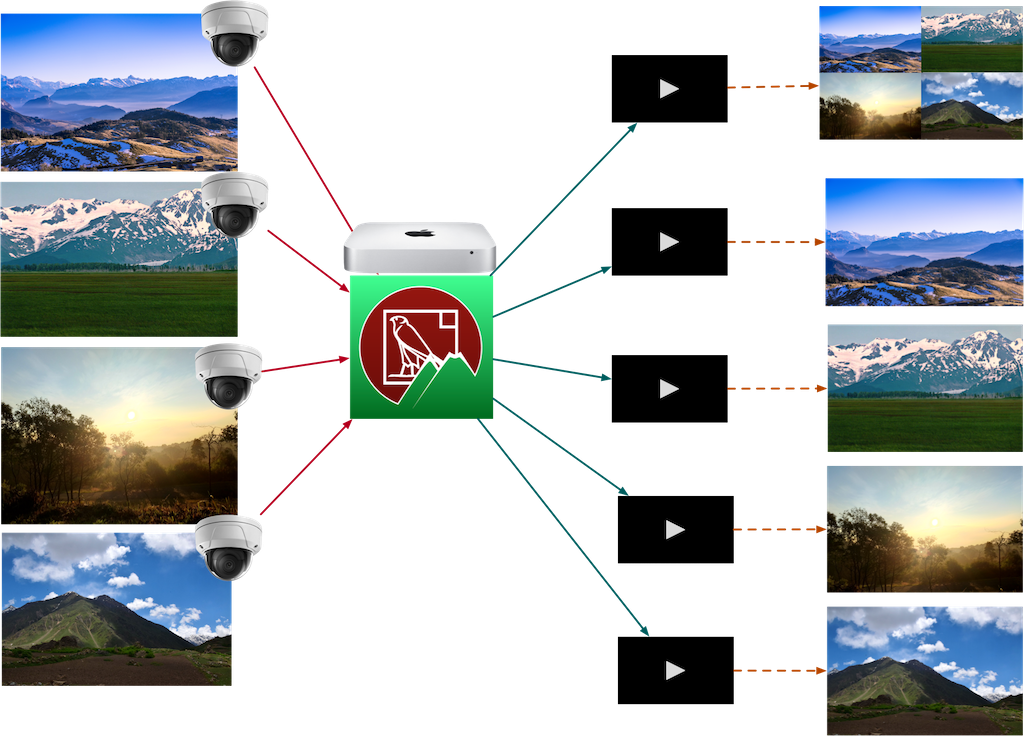

PHASE 3

In Phase 3, things start getting interesting. This is when I start creating individually-served HLS streams:

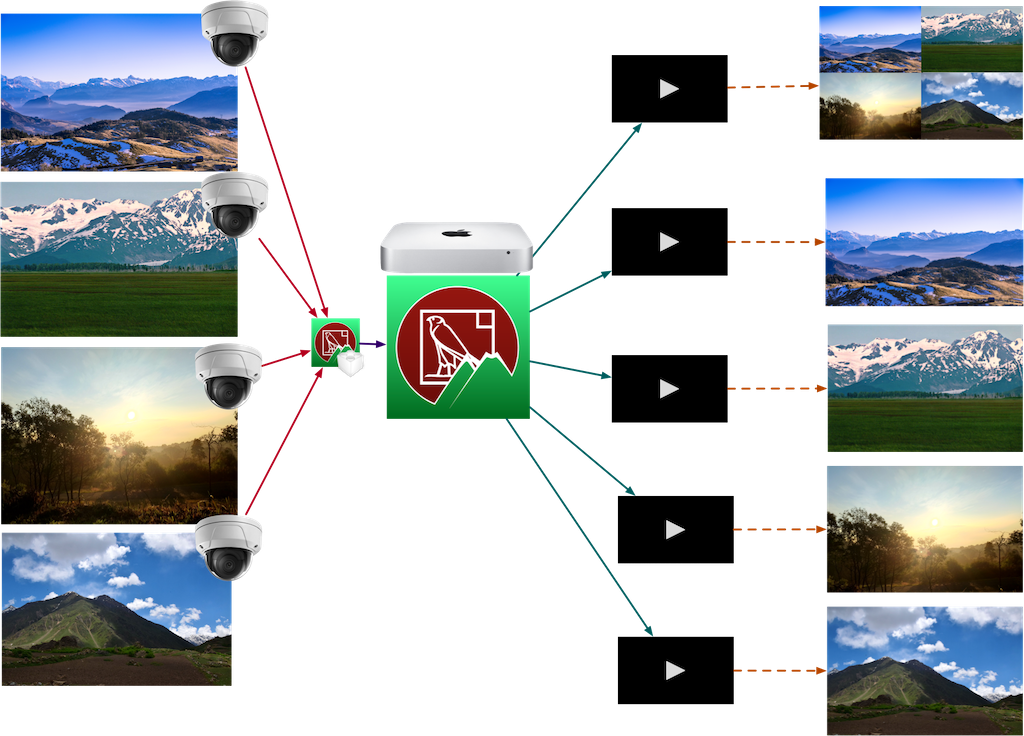

PHASE 4

In Phase 4, I will insert my ONVIF driver into the input to the converter:

And finally, in Phase 5, I will provide some kind of “control” features to the individualized streams. I’m not yet sure of how I will do this. It’s likely to involve some kind of API.

I initially wanted to avoid a solution like this, as I hate having to rely on servers. The object of my ONVIF work was to provide a direct, 1-to-1 relationship between the end user and the surveillance device.

That said, it’s not a huge loss. The current way that surveillance systems are done is through the use of NVRs, which are dedicated servers. We could consider the streaming server a “software NVR,” albeit fairly simple and specialized.

THE CHALLENGES

I foresee that the biggest challenges will be ensuring good performance and low latency.
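To put a rough number on the latency concern: the HLS specification (RFC 8216) recommends that a player not start a live stream less than three target durations from the end of the playlist. A back-of-the-envelope estimate, ignoring encoding and network delays, is therefore about three segment lengths. The function name and the segment lengths used below are only examples.

```python
def estimated_hls_delay(segment_seconds, segments_behind_live=3):
    """Rough lower bound, in seconds, on how far a player sits behind live."""
    return segment_seconds * segments_behind_live


if __name__ == "__main__":
    for seconds in (6, 2):
        delay = estimated_hls_delay(seconds)
        print(f"{seconds}s segments: roughly {delay}s behind live")
```

Shorter segments reduce the delay, at the cost of more files and more requests per minute.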

I’m going to need to learn a lot about video programming. I’ve worked on this stuff before, and I know that it’s non-trivial. However, ffmpeg is an awesome resource that I didn’t have before, so I think that we might get somewhere.

Additionally, even though I have been writing iOS apps for a long time, I haven’t shipped any MacOS apps for about twenty years. I’ll be learning up on Cocoa and the app development process as I go along.

THE VARIABLES

LICENSING

I’ve already decided to use ffmpeg as the video streaming/converting library.

That means that I need to consider licensing. ffmpeg is an LGPL 2.1-licensed library, and some of its optional components are licensed under GPL Version 2. As I am only using this as an “interim” part of the work I’m doing, I have no issue making the entire project GPL Version 2.

That means that I will be writing it as a completely open-source project. The repo is here. It will be updated, probably several times a day, as I proceed on the project.

“SHIP-READY”

It will also be a “ship-quality” project. It won’t be one of my test harnesses or experiments, where I can be a bit more casual about code quality than I usually am. I plan to make sure that it is tested thoroughly, and well-documented.

THE WEBSERVER

I wanted to find a decent Webserver. I have a couple of choices:

- I can use the built-in Apache Webserver (supplied by MacOS X), or use a third-party tool, like MAMP.

- I can provide my own Webserver, built into the app. This would require implementing and licensing a package to be included as a dependency.

Using the built-in Webserver is a non-starter. Even though it would probably give the best performance and flexibility, it would be too complex to expect integrators or users to set up. I would need to have the application be as “black box” as possible.

I’m not thrilled with dependencies, in general, but they are a necessary evil; especially if you are a “lone wolf” programmer, like me, and want to do stuff that has relevance in today’s IT world.

Selecting dependencies is a serious job. I feel that most folks, these days, are a bit “flippant” about it, but you need to remember that, once you have selected a dependency, you “marry” it. Depending on its nature, you could be setting yourself up for a very painful process in the future, if you choose poorly.

It’s not just a matter of retooling to fit a different dependency; quality and security are also major factors.

ffmpeg is a “no-brainer.” If I can use it, I will.

The Webserver is a different matter. I have choices.

After poking around, I decided to use the GCDWebServer library. It’s a well-supported, performant and flexible library, with favorable licensing terms, that looks like it will fit into my workflow.

Onward and upward…