Now that I know (pretty much) where I’m going, I can start to set things up.

Here’s the GitHub Pages site for the project.

I will be writing the server in Swift. It’s the current “must have” language for Apple development, and I’ve been using it for the last five years. It’s a great language, and I’m happy to be working in it.

PREPARE THE DEVELOPMENT ENVIRONMENT

I will be using Apple Xcode as my development environment. It’s the IDE that Apple provides to developers. It’s free, and quite powerful. I’ve been using it for a long time, and there’s really no argument not to use it (for me).

I also tend to be quite strict about my code quality, so I do a couple of things:

- I use SwiftLint

- I use an xcconfig file that has a bunch of warnings turned on and treated as errors. This is the file.

I also have a number of utilities that I like to leverage to do some of the more basic things in my coding, like dealing with persistent prefs.

So I created a basic project, set up licensing for GPL 2, and established the various basics that I like to have in most of my projects.

This will be a Mac app, so I set up a very basic Mac app target.

TESTING

I don’t practice “pure” TDD, but I like many of its tenets. I’m totally obsessive about quality. I set up a basic test target, leaving it empty for now. There’s a lot of stuff in this app that won’t be testable with standard XCTests, but there’s also a lot that should be.

GETTING JAZZY

I set up a Jazzy file. I want to start generating documentation as soon as possible. I like documentation that grows up with the code.

DEPENDENCIES

I needed to figure out how to include my dependencies. I have three (currently):

- ffmpeg

- GCDWebServer

- SwiftLint

Jazzy is sort of a special case. It isn’t really a dependency. It’s a utility that I add to my system, and call from the development environment directory, but not as part of the build process.

SwiftLint pretty much requires that I use CocoaPods. I don’t like using CocoaPods for shipping software. I won’t go into why I feel that way, but SwiftLint isn’t something that I ship with the app. It’s used at build time to validate the code.

So I set up a Podfile with SwiftLint applied to my application target, installed it, then cleaned up the CocoaPods cruft that gets added to my project. Finally, I added the SwiftLint step to the build phases.

GIT SUBMODULES

Even though I detest Git submodules, I decided to use them for the two main dependencies. First, ffmpeg is a completely cross-platform system and doesn’t have any “special setup” for the Mac, so I can’t use a “clean” dependency manager, like Carthage, for it. I have to have the entire project tree on my computer, so I can do a traditional UNIX make on it.

For GCDWebServer, I needed to make a couple of very minor changes to the project to get it to build properly on my machine, so I needed to fork it. It’s best to use a submodule for that kind of thing.

The good thing about submodules is that they pin an exact version (commit) of each dependency to your project, so even if HEAD keeps moving, you have the exact set of code you need to reproduce your build.

GCDWebServer

For GCDWebServer, I need to make a couple of extremely minor project changes to make it build the way I want. These aren’t changes I want to send upstream to the main project, so I forked the project here and included the fork as a submodule. That also gives me a little more control over what goes into my project.

I have control issues…

I add the GCDWebServer project file (it’s an Xcode project) to my workspace, and build it. I’m only interested in the macOS variant (it also builds iOS and tvOS).

I include it as an embedded framework. I’ll be directly calling its methods.

ffmpeg

ffmpeg is not a GitHub resource; they run their own repo. I clone that repo as a submodule of my project, and set it to track the 4.2 release branch.

I chose the 4.2 release branch because it gives me the latest release, and I don’t have to keep rebuilding every day, as I would if I used the mainline.

Unlike GCDWebServer, which I link in as a framework, ffmpeg will be used as a command-line utility: I’ll run it in a separate process, one for each stream instance. This is a slightly different usage.

I do not want to rely on installing ffmpeg on the system, for basically the same reason that I don’t want to use Apache. I need to embed it into the app.

So I set up an aggregate target in my project to build ffmpeg. I use a custom configure command, so it will include only the libraries I want (this may change over time, as I throw more cameras at it):

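```sh
#!/bin/sh
# Stop at the first failing command, so a failed configure or make fails the Xcode build.
# Without this, the script's exit status is that of the final cd, which usually succeeds.
set -e

CWD="$(pwd)"
cd "${PROJECT_DIR}/ffmpeg-src"

./configure --enable-gpl --enable-libx264 --enable-libx265 --enable-appkit --enable-avfoundation --enable-coreimage --enable-audiotoolbox
make

cd "${CWD}"
```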

That builds ffmpeg with H.264 and H.265 (via libx264 and libx265), as well as the various default handlers (like Motion JPEG). I also enable a few flags for basic Apple frameworks (AppKit, AVFoundation, Core Image, and AudioToolbox).

I don’t make the aggregate target a dependency of the main application target. It takes too damn long to build ffmpeg. I shouldn’t need to build it too often.
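Because the aggregate target isn’t a build dependency, a clean checkout can produce an app bundle with no ffmpeg in it, and that would only show up when the app tries to launch it. A minimal guard, assuming ffmpeg sits next to the main executable (as the launch code in this post expects), could check for it up front. The helper name `bundledExecutableURL(named:in:)` is hypothetical:

```swift
import Foundation

/// Hypothetical helper: returns the URL of an executable named `name` inside `directory`,
/// or nil if the file is missing or not marked executable.
func bundledExecutableURL(named name: String, in directory: URL) -> URL? {
    let candidate = directory.appendingPathComponent(name)
    // isExecutableFile(atPath:) is false both for missing files and for files without the execute bit.
    return FileManager.default.isExecutableFile(atPath: candidate.path) ? candidate : nil
}
```

In the app, the directory would come from `Bundle.main.executableURL?.deletingLastPathComponent()`; Foundation’s `Bundle` also offers `url(forAuxiliaryExecutable:)` for locating helper binaries like this one.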

I’ll likely be doing a lot of playing with the configuration, as the project proceeds.

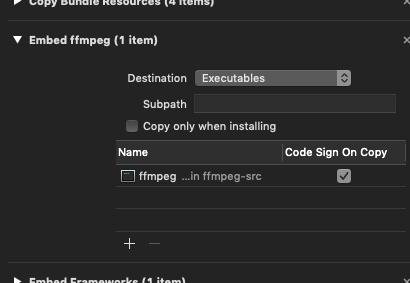

I then take the resulting ffmpeg executable from that project, and embed it in the app’s executable directory.

I call it as a separate process, instead of linking it into the app. Here’s how I do that:

```swift
/* ################################################################## */
/**
 This starts the ffmpeg task.
 Assumes these members of the enclosing class: ffmpegTask (Process?), outputTmpFile (TemporaryFile?), and prefs.

 - returns: True, if the task launched successfully.
 */
func startFFMpeg() -> Bool {
    // Process() is not a failable initializer, so there is nothing to unwrap here.
    let task = Process()
    ffmpegTask = task

    // Next, set up a temporary directory for the stream files.
    guard let tmp = try? TemporaryFile(creatingTempDirectoryForFilename: "stream.m3u8") else { return false }
    outputTmpFile = tmp

    // Fetch the executable path from the bundle. We have our copy of ffmpeg in there with the app.
    guard let executableDir = Bundle.main.executableURL?.deletingLastPathComponent() else { return false }
    let executableURL = executableDir.appendingPathComponent("ffmpeg")

    task.executableURL = executableURL  // Replaces the deprecated launchPath.
    task.arguments = [
        "-i", prefs.input_uri,
        "-sc_threshold", "0",
        "-f", "hls",
        "-hls_flags", "delete_segments",
        "-hls_time", "4",
        tmp.fileURL.path
    ]

    #if DEBUG
    print("\n----\n" + ([executableURL.path] + (task.arguments ?? [])).joined(separator: " "))
    #endif

    // Launch the task. run() replaces the deprecated launch(), and throws instead of
    // raising an Objective-C exception if the binary is missing or not executable.
    do {
        try task.run()
    } catch {
        #if DEBUG
        print("ffmpeg failed to launch: \(error)")
        #endif
        return false
    }

    #if DEBUG
    print("\n----\n")
    #endif

    return task.isRunning
}
```
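The post doesn’t show the shutdown side. A minimal counterpart, sketched under the assumption that the app keeps one `Process` per stream, would terminate ffmpeg and wait for it to exit before cleaning up the temporary directory (with whatever cleanup call the temp-directory helper provides). To keep it self-contained and testable, the process is passed in; `stopProcess(_:)` is a hypothetical name:

```swift
import Foundation

/// Hypothetical helper: stops a child process (such as the ffmpeg task) and waits for it to exit.
/// - returns: True if the process is no longer running.
@discardableResult
func stopProcess(_ task: Process) -> Bool {
    if task.isRunning {
        task.terminate()      // Sends SIGTERM to the child process.
        task.waitUntilExit()  // Blocks until the child has actually exited.
    }
    return !task.isRunning
}
```

Waiting for the exit matters here because the temporary directory shouldn’t be deleted while ffmpeg may still be writing segments into it.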

APP STRUCTURE

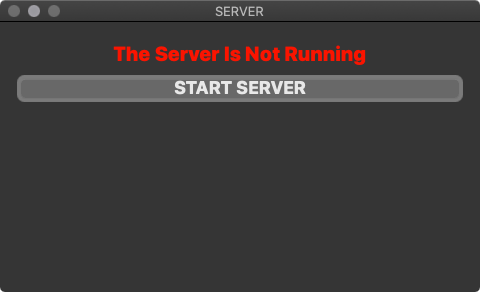

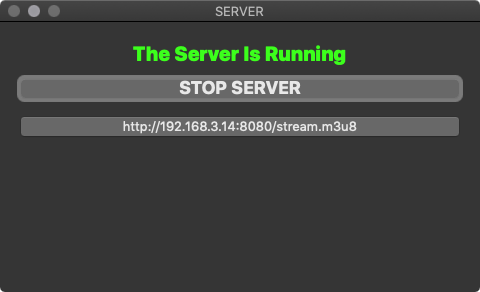

I set up the app to have a couple of windows:

- A regular screen that displays the application server status

- A "preferences" screen that allows me to tweak various settings.

Since Phase 1 is all about getting a single stream to work, I don't want to spend too much time setting up a complicated UX for managing multiple streams.

However, I want to make sure that I don't do anything this early that precludes it.

PERSISTENT PREFERENCES

I will use the Apple Bundle to store my persistent preferences. This is a classic for me; I do it in most of my apps. Each time, I do a bit of "copy/pasta" to set it up. This time, I wanted to give it a bit more "lasting power," so I split the functionality between a generic storage facility and a specialized class for the media server app.

I deliberately designed it so that I can have multiple copies of the class, so when I go into the next phase, I won't need to rip out much wiring.

Also, down the road, I will probably be using the Apple Keychain to store the passwords for the streams. The specialized class will allow me to do that.

I also wrote some tests for the persistent prefs. They will be an important infrastructure component, and their implementation needs to be rock-solid.

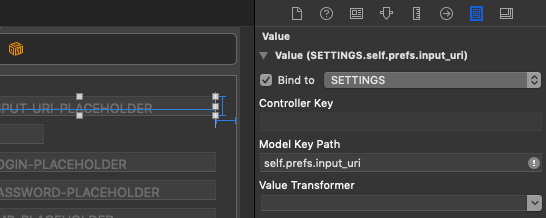

I also did one more thing with the prefs. I made them Key-Value Observable. This means that I'll be able to connect them directly to the user interface elements in the preferences screen, and that they will scale nicely for SwiftUI, in the future.

This is the class that implements the KVO pattern. You can see it in action if you look at the storyboard file. I link the controls in the preferences screen directly to the prefs’ calculated properties.

The calculated properties immediately update the persistent (bundle) prefs. The result is that as soon as you change one of the values in the Preferences screen, it is immediately changed in persistent app storage. No code needed.
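The split between generic storage and app-specific calculated properties can be sketched roughly like this. It is a minimal sketch under stated assumptions: the "bundle" prefs are taken to be `UserDefaults`, each instance namespaces its keys with a prefix so several copies can coexist (one per stream, later on), and the names `PersistentPrefs`, `MediaServerPrefs`, and `inputURI` (standing in for the `input_uri` property used in the launch code) are hypothetical. For storyboard bindings on macOS, these would be `NSObject` subclasses with `@objc dynamic` properties; that is left out so the sketch compiles without the Objective-C runtime.

```swift
import Foundation

/// Hypothetical generic storage: reads and writes UserDefaults values under a per-instance prefix.
class PersistentPrefs {
    private let prefix: String
    private let defaults: UserDefaults

    init(prefix: String, defaults: UserDefaults = .standard) {
        self.prefix = prefix
        self.defaults = defaults
    }

    /// Builds the namespaced key, e.g. "stream-1.input_uri".
    private func fullKey(_ key: String) -> String { "\(prefix).\(key)" }

    func string(_ key: String, fallback: String = "") -> String {
        defaults.string(forKey: fullKey(key)) ?? fallback
    }

    func setString(_ value: String, for key: String) {
        defaults.set(value, forKey: fullKey(key))  // Stored immediately; there is no separate "save" step.
    }

    func remove(_ key: String) {
        defaults.removeObject(forKey: fullKey(key))
    }
}

/// Hypothetical app-specific layer: calculated properties that write straight through to storage.
class MediaServerPrefs: PersistentPrefs {
    var inputURI: String {
        get { string("input_uri") }
        set { setString(newValue, for: "input_uri") }
    }
}
```

A second stream would simply get its own prefix, e.g. `MediaServerPrefs(prefix: "stream-2")`, without touching the storage class.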

LOCALIZATION

Of course, you never EVER write strings to UI without localizing them, do you? I have a couple of tricks that I use to make localization a "no-brainer." Naturally, I use a Localizable.strings file. This contains all the displayed strings, keyed by text slugs. I make it obvious that the slugs are "slugs" by making them ugly, all-caps, and prefixed by "SLUG-".

I also add an extension to the StringProtocol data type, with a calculated property called "localizedVariant," which performs the lookup in the localization file for you:

```swift
import Foundation

extension StringProtocol {
    /* ################################################################## */
    /**
     - returns: the localized string (main bundle) for this string.
     */
    var localizedVariant: String {
        return NSLocalizedString(String(self), comment: "") // Need to force self into a String.
    }
}
```
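A small usage sketch. Because the extension is on StringProtocol, slices (Substring) work as well as String. When a slug is missing from Localizable.strings, NSLocalizedString returns the key itself, which is where the ugly "SLUG-" keys pay off: an untranslated string stands out on screen. The `isMissingLocalization` property and the slug names below are hypothetical additions, not part of the project:

```swift
import Foundation

extension StringProtocol {
    /// The localized string (main bundle) for this slug.
    var localizedVariant: String {
        return NSLocalizedString(String(self), comment: "")  // String(self) handles String and Substring alike.
    }

    /// Hypothetical helper: true when no translation was found, because NSLocalizedString
    /// hands back the key unchanged in that case. Ugly slugs make false positives unlikely.
    var isMissingLocalization: Bool {
        return localizedVariant == String(self)
    }
}
```

In a DEBUG build, this could be used to log any slug that a screen displays without a translation.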

As I was working on the implementation, I discovered that ffmpeg doesn’t like the sandbox: it tries to load libSDL from a specific location on the Mac.

This seems a bit nuts, so I suspect that I’m doing something wrong. I’ll try to track down why that’s happening.

Another thing that I ran into was that I need to set up temporary directories that ffmpeg dumps its files into, and that GCDWebServer picks them up from.

I decided to use Ole Begemann’s excellent Temporary Directory utility. He’s quite good, and his implementations tend to be about as efficient and effective as you can get.
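The core idea behind such a helper fits in a few lines: create a uniquely named directory under the system temporary directory, and hand back a URL for a file inside it. The sketch below is a hypothetical stand-in (`ScratchDirectory` is an invented name), not Ole Begemann’s code; ffmpeg would write its playlist and segments into `directoryURL`, and the web server would serve from there.

```swift
import Foundation

/// Hypothetical stand-in: a uniquely named temporary directory plus the URL of one file inside it.
/// The file itself is not created; ffmpeg creates it when it starts writing.
struct ScratchDirectory {
    let directoryURL: URL
    let fileURL: URL

    init(forFilename filename: String) throws {
        // A UUID keeps concurrent instances (one per stream) from colliding.
        directoryURL = FileManager.default.temporaryDirectory
            .appendingPathComponent(UUID().uuidString, isDirectory: true)
        try FileManager.default.createDirectory(at: directoryURL, withIntermediateDirectories: true)
        fileURL = directoryURL.appendingPathComponent(filename)
    }

    /// Removes the directory and everything written into it.
    func delete() throws {
        try FileManager.default.removeItem(at: directoryURL)
    }
}
```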

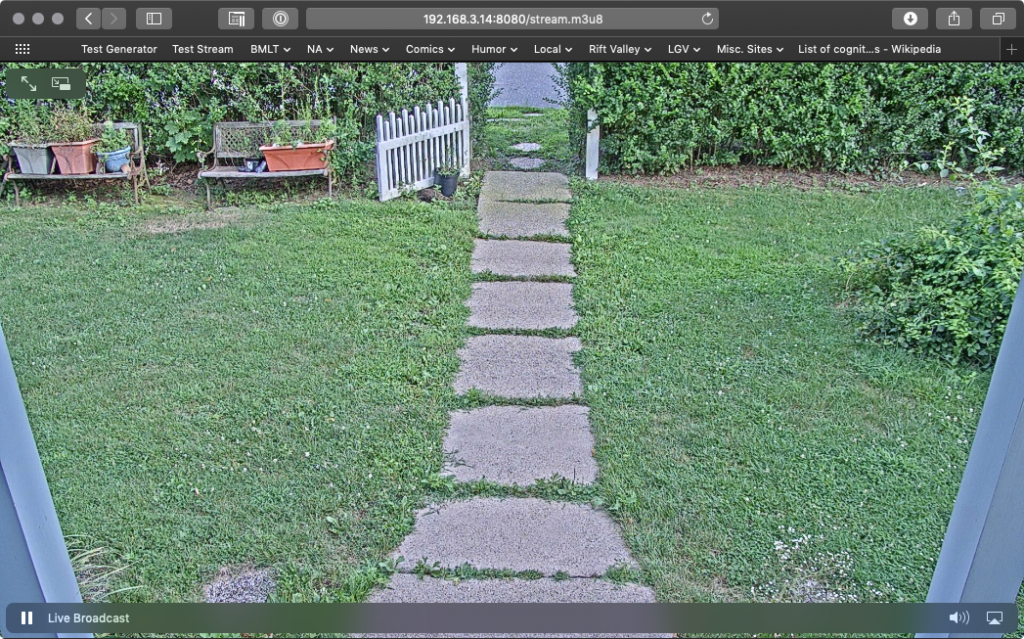

I then set up the screens, tied everything together, and got the bare essentials working: reading in one RTSP stream, converting it to HLS, and serving it from the application.
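One practical wrinkle in that hand-off: ffmpeg needs some time to produce its first segment, so the playlist may not exist yet right after launch. A minimal polling sketch that could run before pointing the browser at the stream is shown below; the helper name and the timing values are assumptions, not from the project.

```swift
import Foundation

/// Hypothetical helper: polls until a file exists at `url`, or until `timeout` seconds pass.
/// It blocks the calling thread, so a real app would call it off the main thread.
func waitForFile(at url: URL, timeout: TimeInterval, pollInterval: TimeInterval = 0.25) -> Bool {
    let deadline = Date().addingTimeInterval(timeout)
    while true {
        if FileManager.default.fileExists(atPath: url.path) { return true }
        if Date() >= deadline { return false }
        Thread.sleep(forTimeInterval: pollInterval)
    }
}
```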

VOILA

Server Not Running |

Bottom button Opens the Browser |

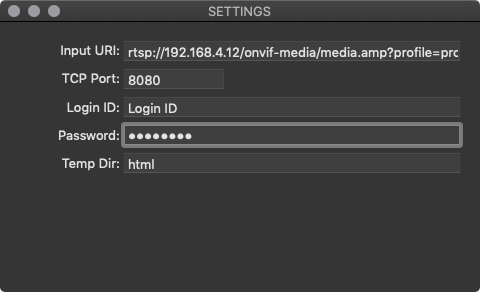

The Prefs Screen |

That’s where I am so far this week.